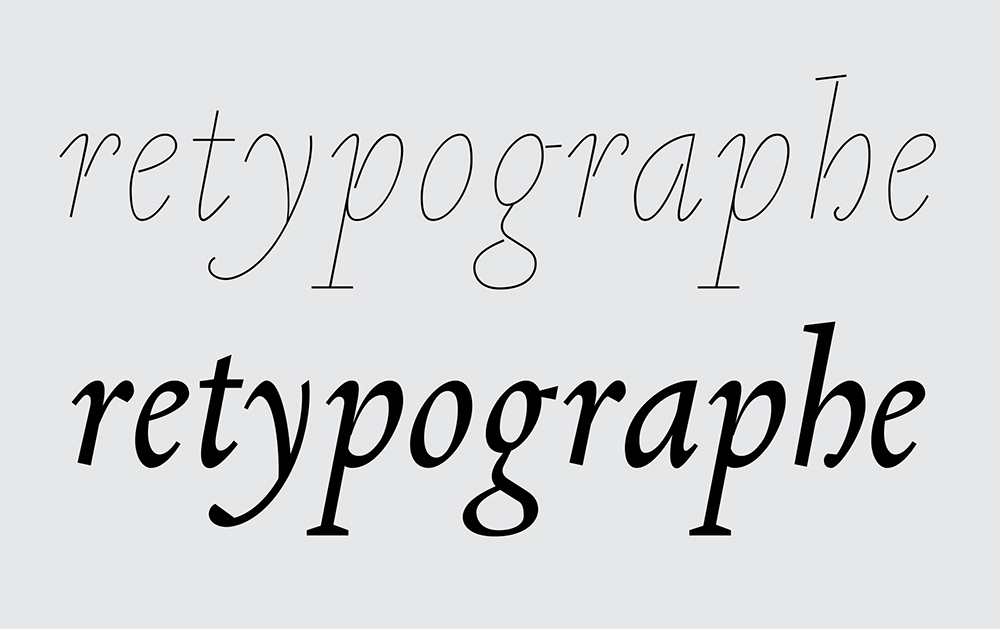

Facsimiles made available on online platforms like Gallica and Project Gutenberg are sometimes accompanied by text documents generated by optical character recognition (OCR) software; however, such documents are stripped of their original typographical format. Considering that current digitization technologies for recording old books dissociate content from form, the aim of the Re-typographe Project (http://b-o.fr/retypographe) is to create a computer program capable of dynamically vectorizing the typography of Renaissance books. Since 2013, Re-typographe has been in the hands of the ANRT (Atelier national de recherche typographique) in Nancy, in partnership with the LORIA (Laboratoire lorrain de recherche en informatique et ses applications). Coordinated by Thomas Huot-Marchand and Bart Lamiroy, it was directed first by Thomas Bouville from 2013 to 2014, then by David Vallance from 2014 to 2015. This article describes the initial research on the re-creation and digitization of the characters, an aspect of the work that raised a series of very complex challenges.

A Question of Distance

Transcribing an old typeface and rendering it in a current technological standard is a common practice in the field of type design. Homage, restoration, resurrection, adaptation, imitation and reinterpretation are some of the many ways of approaching and relating to the original that affect reproduction. (John Downer draws up a typology of the positions that designers can take toward historical sources in the type specimen booklet for Tribute, published by the Emigre foundry; see John Downer, “Call It What It Is,” Introducing “Tribute”—a family of 8 fonts by Frank Heine, released by Emigre Fonts, Sacramento: Emigre, 2003.) In all these cases, the exercise is necessarily tinged by a conspicuous degree of subjectivity on the part of the interpreter. In Re-typographe, re-creation respects the topography of the text (its visual elements and their placement) and the absence of certain characters (k, w, @, etc.) in period alphabets, while imperfections of lead type or metal printing are eliminated.

Although this working framework already delineates the distance that should separate the program’s end product from its source, that distance remains relative. What are we to regard as “imperfections”? What is to be done with inking differences between the lines? How are badly printed letters to be managed, and letters whose punches have been broken? These questions have been explored using two character-digitization methods, each one based upon characters grouped by formal similarities.
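As a rough illustration of what grouping characters by formal similarity can mean in practice, here is a minimal sketch. It is not the project's method: the pixel-difference (Hamming) measure, the greedy strategy, the `max_diff` tolerance and the tiny sample bitmaps are all assumptions chosen for brevity.

```python
from typing import List

Bitmap = List[List[int]]  # 1 = ink, 0 = paper; all bitmaps share one size


def hamming(a: Bitmap, b: Bitmap) -> int:
    """Count the pixels at which two same-sized bitmaps differ."""
    return sum(pa != pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))


def group_by_similarity(glyphs: List[Bitmap], max_diff: int) -> List[List[int]]:
    """Greedy grouping (an assumed strategy): each glyph joins the first group
    whose first member differs from it by at most `max_diff` pixels,
    otherwise it starts a new group. Returns groups of indices into `glyphs`."""
    groups: List[List[int]] = []
    for i, glyph in enumerate(glyphs):
        for group in groups:
            if hamming(glyphs[group[0]], glyph) <= max_diff:
                group.append(i)
                break
        else:
            groups.append([i])
    return groups


# Hypothetical 3x2 scans: two impressions of one letter and one different letter.
glyphs = [
    [[1, 0], [1, 0], [1, 1]],  # an L-like shape
    [[1, 1], [1, 0], [1, 0]],  # a different, mirrored shape
    [[1, 0], [0, 0], [1, 1]],  # the L-like shape with a gap in the stem
]
groups = group_by_similarity(glyphs, max_diff=1)
```

With this tolerance the damaged impression is grouped with its intact counterpart, while the other shape stays apart. Choosing the tolerance is itself one way of deciding what counts as an "imperfection".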

Refining Shapes

The first method consists of calculating the average form of the bitmap images in each group of letters. The result is a stable image, stripped of the variations inherent in the printing process, but the forms obtained unfortunately look somewhat eroded. This approach does not seek to go beyond the printed originals; consequently, it reproduces junctions between stems and curves that are too thick, or “blocked” counterforms, whenever these alterations are present in the document being analyzed. What mechanisms would make it possible to refine this result? How can we produce a more relevant average form? What types of samples should be selected? What method of calculation would be the most appropriate? Would it be wise to regroup the occurrences of letters before coming up with an average?
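The averaging idea can be sketched in a few lines. This is an illustration under stated assumptions, not the project's implementation: the samples are assumed to be binary bitmaps that are already aligned and of equal size, and the function names and the `level` threshold are hypothetical.

```python
from typing import List

Bitmap = List[List[int]]  # 1 = ink, 0 = paper


def average_glyph(samples: List[Bitmap]) -> List[List[float]]:
    """Return the per-pixel mean ink coverage over aligned, same-sized samples."""
    if not samples:
        raise ValueError("need at least one sample")
    height, width = len(samples[0]), len(samples[0][0])
    for s in samples:
        if len(s) != height or any(len(row) != width for row in s):
            raise ValueError("all samples must have the same dimensions")
    n = len(samples)
    return [
        [sum(s[y][x] for s in samples) / n for x in range(width)]
        for y in range(height)
    ]


def threshold(mean: List[List[float]], level: float = 0.5) -> Bitmap:
    """Binarize the mean image: keep pixels inked in at least `level` of the samples."""
    return [[1 if v >= level else 0 for v in row] for row in mean]


# Three hypothetical 3x3 impressions of the same stem, two of them defective.
samples = [
    [[0, 1, 0], [0, 1, 0], [0, 1, 0]],  # clean impression
    [[0, 1, 1], [0, 1, 0], [0, 1, 0]],  # ink spread at the top right
    [[0, 1, 0], [0, 0, 0], [0, 1, 0]],  # gap in the middle of the stem
]
mean = average_glyph(samples)
stable = threshold(mean)           # majority vote: the clean stem is recovered
strict = threshold(mean, level=0.9)  # stricter vote: the gap eats into the stem
```

In this toy case a majority threshold removes both defects, while a stricter threshold keeps only pixels inked in nearly every sample and therefore thins the form. That is one plausible way an averaged shape can end up looking eroded, and it shows why the choice of samples and of calculation method matters.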

Defining Parametric Models

The second method involves a reinterpretation of the original glyph based on the detection of a basic ductus, to which parameters are applied. This frees the result from the problems associated with printing and the wear and tear of the type, but also requires accepting a certain simplification of shape. Faithfulness to the details of the original letter takes a backseat to overall coherence. Still, one might wonder whether a standardized and configured skeleton can really reproduce Renaissance forms. What is the acceptable degree of loss? To what extent is it possible to establish relations between the different formal elements that compose an alphabet?
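To make the idea of a parameterized skeleton more concrete, here is a minimal sketch. It swaps in a simple broad-nib pen model, which is an assumption of this example and not a description of the project's parametric model: the `PenParams` fields, the function names and the sample skeleton are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class PenParams:
    """Hypothetical global parameters shared by every letter of a font."""
    nib_width: float      # widest possible stroke, in font units
    nib_angle_deg: float  # orientation of the broad nib
    hairline: float       # minimum stroke thickness


def stroke_thickness(p0: Point, p1: Point, pen: PenParams) -> float:
    """Thickness of a broad-nib stroke drawn along the segment p0 -> p1."""
    direction = math.atan2(p1[1] - p0[1], p1[0] - p0[0])
    nib = math.radians(pen.nib_angle_deg)
    # A nib sliding along its own edge leaves a hairline; moving across
    # its edge, it leaves a stroke of the full nib width.
    return max(pen.hairline, pen.nib_width * abs(math.sin(direction - nib)))


def thicknesses(skeleton: List[Point], pen: PenParams) -> List[float]:
    """Thickness of each successive segment of a polyline skeleton."""
    return [stroke_thickness(a, b, pen) for a, b in zip(skeleton, skeleton[1:])]


# Hypothetical skeleton: a vertical stem followed by a horizontal stroke.
skeleton = [(0.0, 700.0), (0.0, 0.0), (300.0, 0.0)]
pen = PenParams(nib_width=90.0, nib_angle_deg=30.0, hairline=12.0)
widths = thicknesses(skeleton, pen)
```

Because the same `PenParams` instance drives every letter, the stem and the horizontal stroke receive consistent but different weights. This is the kind of overall coherence gained at the cost of letter-specific detail.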

The Eye of the Machine

In typography, the (human) eye often has the last word. So how are we to define, in words, the result expected from a program for generating characters? Do objective criteria for a “right” typographical form exist? What procedures should be introduced to evaluate the relevance of the different directions set forth here? How can software be programmed with historical and cultural information?

The typographical and technical research undertaken as part of the Re-typographe Project has begun to provide basic answers to these questions, and these answers have been compiled to assist in the creation of a functional program prototype. This first version represents an important phase, and its use will certainly provide insight into current questions and raise new ones.