This is an excerpt of an article that originally appeared on Mediamatic and i.liketightpants.net in May 2014.[1] It was written as part of a residency at BAT Editions at La Panacée, the Center for Contemporary Culture in Montpellier, France, where Eric Schrijver, Alexandre Leray and Stéphanie Vilayphiou (Open Source Publishing) began to reflect upon methods of writing online.

[1] i.liketightpants.net is a blog founded by Eric Schrijver in 2010. (Ed.)

In 2004 I discovered the website of the Amsterdam magazine/Web platform/art organization Mediamatic.[2] […] The site opens up a whole new editing experience. In edit mode, the page looks essentially the same as on the public-facing site, and as I change the title it remains all grand and italic. I had been used to content management systems [CMS] that offer sad, unstyled form fields in the default browser style, totally dissociating the input of text from the final layout. That one can get away from the default browser style, and edit in the same style as the site itself, is nothing short of a revelation to me, even if desktop software has shown this to be possible for quite some time already. […]

[2] The 2016 interface does not seem to have changed substantially since 2004. (Ed.)


If we look at the experience of writing on WordPress, the most commonly used blogging platform, the first thing one notes is that the place where one edits the posts is quite distinct from the place that is visited by the reader: you are in the “back end.” There is some visual resemblance between the editing interface and the article: headings are bigger than body text, italics become italic. But the font does not necessarily correspond to the resulting posts, nor do the line-width, line-height and so forth. Some other elements are not visual at all: to embed videos from YouTube and the like one uses “shortcodes.”
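To make the idea of a non-visual element concrete, the sketch below expands a shortcode into the HTML a reader eventually sees. It is a minimal illustration in Python, assuming a simplified, hypothetical `[youtube id="…"]` syntax and a plain iframe as output; WordPress’s actual shortcode handling is written in PHP and covers far more cases.

```python
import re

# A simplified, hypothetical shortcode grammar: [youtube id="VIDEO_ID"].
# Restricting the id to letters, digits, "_" and "-" keeps it safe to
# paste into an HTML attribute without further escaping.
SHORTCODE = re.compile(r'\[youtube id="([A-Za-z0-9_-]+)"\]')


def expand_shortcodes(text: str) -> str:
    """Replace every [youtube id="..."] tag with an embed iframe."""

    def to_iframe(match: re.Match) -> str:
        video_id = match.group(1)
        return (
            f'<iframe src="https://www.youtube.com/embed/{video_id}" '
            "allowfullscreen></iframe>"
        )

    # Text outside the shortcodes is passed through untouched.
    return SHORTCODE.sub(to_iframe, text)


post = 'Watch this: [youtube id="abc123"]'
print(expand_shortcodes(post))
```

In the editing interface the writer only ever sees the bracketed code; the embedded video appears on the public page alone, which is the separation between back end and published post described above.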

Technologically, what was possible in 2004 should still be possible now: Web standards have only advanced since then, offering new functionality like contentEditable, which makes it easy to turn part of a webpage into an editable area without much further scripting. So where are the content management systems that take advantage of these technologies? […]

The Dominant Computing Paradigm and its Counterpoint

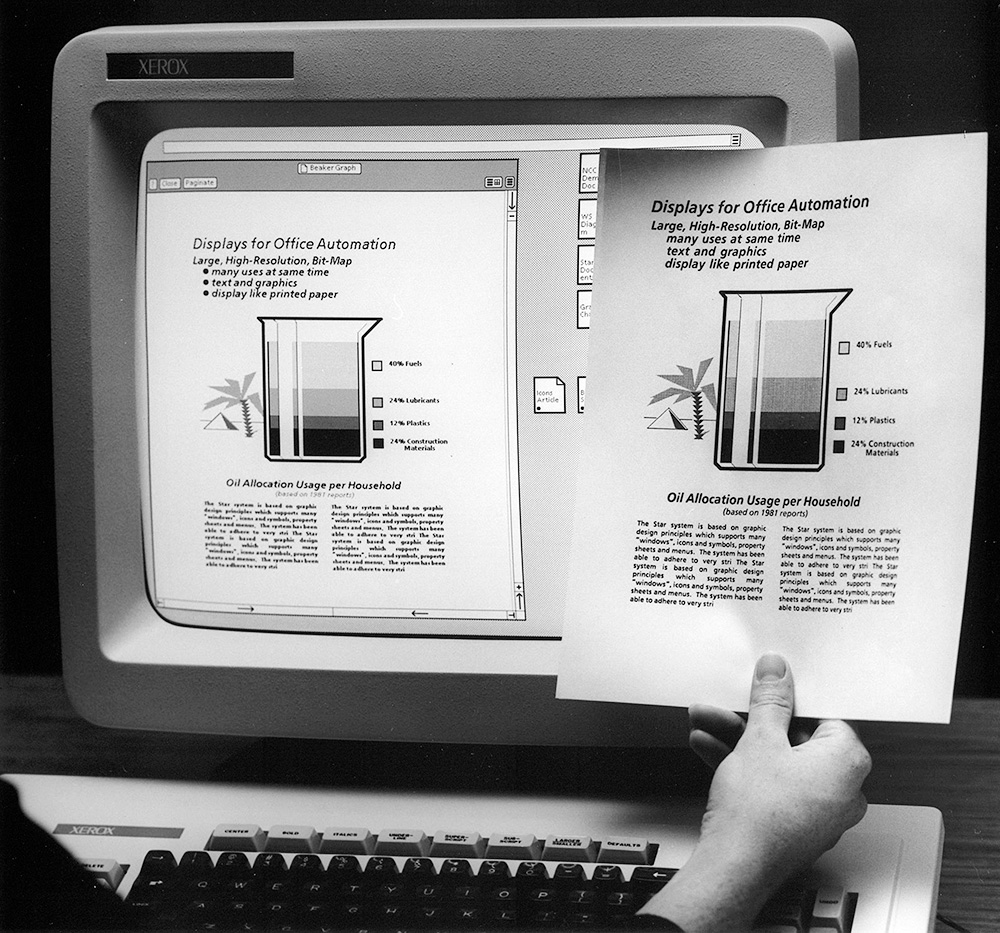

An editing interface that resembles its final visual result is known as WYSIWYG (What You See Is What You Get). The term dates from the introduction of graphical user interfaces [GUI]. The Apple Macintosh offered the first mainstream WYSIWYG programs, and the Windows 3.1 [1992] and especially Windows 95 [1995] operating systems made this approach the dominant one. A word processing program like Word has a prototypical WYSIWYG interface: you edit in an interface that closely resembles the result that comes out of the printer. Most graphic designers also work in WYSIWYG programs: this is the canvas-based paradigm of programs like Illustrator [1987], InDesign [1999], Photoshop [1990], GIMP [1995], Scribus [2003] and Inkscape [2003].

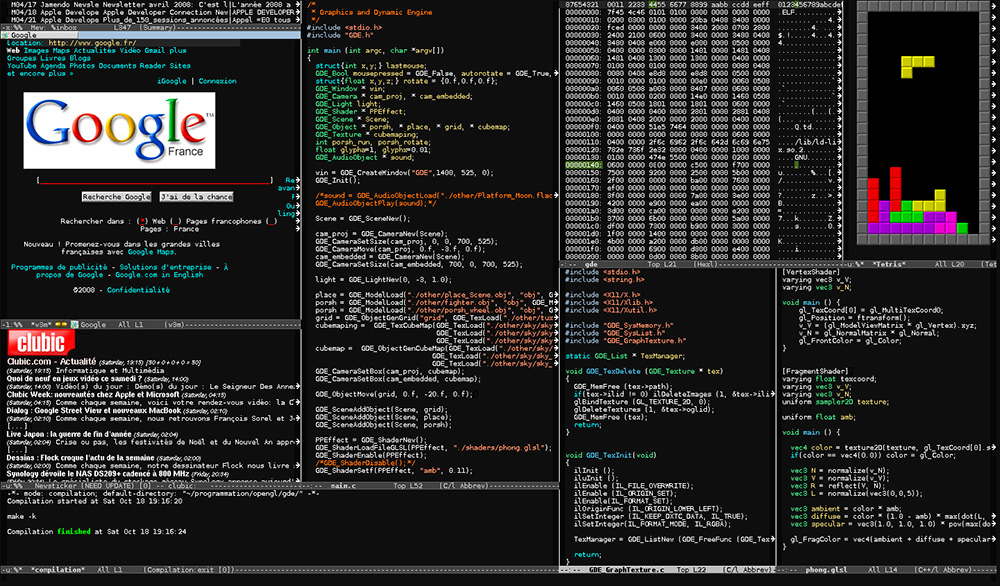

But while it is the dominant paradigm for user interfaces […], the WYSIWYG legacy is not the only paradigm in use. Programmer and author Michael Lopp, also known as Rands, tries to convince us that “nerds” use a computer in a different way. From his self-help guide for the nerd’s significant other, The Nerd Handbook:

“Whereas everyone else is traipsing around picking dazzling fonts to describe their world, your nerd has carefully selected a monospace typeface, which he avidly uses to manipulate the world deftly via a command line interface while the rest fumble around with a mouse.”[3]

[3] Michael Lopp, “The Nerd Handbook,” Randsinrepose (2007). [Online] http://b-o.fr/nerd

Rands introduces a hypothetical nerd who uses a command-line or text-based terminal interface to interact with their computer. […] Yet who exactly likes to use their computer in such a way? […]

Programmers as Gatekeepers

Since the desktop publishing [DTP] revolution in the 1990s, graphic designers have been able to implement their own print designs without the intervention of engineers. In most cases this is not true for the Web: the implementation of websites is ultimately done by programmers. These programmers often have an important say in the technology that is used to create a website. It is only normal that the programmers’ values and preferences are reflected in these choices. […] In contrast with print design, the programming technologies used in creating websites (programming languages, libraries, content management systems) are almost always free software and/or open-source. Even commercial content management systems are often built upon existing open-source components. There are many ways in which this is both inspiring and practical. Yet if this engagement with a collectively owned and community-driven set of tools is commendable, it has one important downside: the values of the community directly impact the character of the tools available.

Hacker Culture

Programming is not just an activity; it is embedded in a culture. All the meta-discourse surrounding programming contributes to this culture. A particularly influential strand of computing meta-discourse is what can be called “hacker culture.” If I were to characterize this culture, I would do so by sketching two highly visible programmers who are quite different in their practice, yet share a set of common cultural references in which the concept of a “hacker” is important.

On the one hand we can look at Richard Stallman, a founder of the Free Software Movement, and tireless activist for “software freedom.” Having coded essential elements of what was to become GNU/Linux [1991], he is just as well known for foundational texts such as the GNU General Public License [GPL].[4] […] On the other hand, you have someone like Paul Graham, a Silicon Valley millionaire and venture capitalist. Influential in start-up culture, Graham has turned his own experience into something of a template for start-ups to follow: start with a small group of twenty-something programmers/entrepreneurs and create a company that tries to grow as quickly as possible, attract funding, and then either fail, be bought, or in extremely rare cases, become a large publicly traded company. His vision of the start-up is both codified in writing and put into practice at the “incubator” Y Combinator [2005].[5] […] Graham has written an article on what it means to be a hacker and has run the popular discussion forum Hacker News.[6] In fact, he refers to the people who create start-ups as hackers.

[4] See: Richard Stallman, “On Hacking,” Stallman (2002). [Online] http://b-o.fr/on-hacking

[5] This incubator has invested in more than 900 start-ups (including Airbnb, Dropbox and Scribd) and non-profit organizations. In 2016, the total valuation of these businesses exceeded $69 billion. (Ed.)

[6] See: Paul Graham, “The word ‘Hacker’,” Paulgraham (2004). [Online] http://b-o.fr/hacker

That Stallman and Graham share a certain culture is shown by how far their conceptions of what a hacker is are removed from the everyday usage of the word. While to most people a hacker means someone who breaks into computer systems, Stallman and Graham agree that the true sense of “hacker” is quite different. Thus, contesting the mainstream concept of hacker is itself important in the subculture: [author and researcher] Douglas Thomas already describes this mechanism in his thoroughly readable introduction to Hacker Culture.[7] A detailed anthropological analysis of a slice of hacker culture is performed in [anthropologist and author] Gabriella Coleman’s Coding Freedom, though it seems to focus on free software developers of the most idealistic persuasion and seems less interested in the major role Silicon Valley dollars play in fuelling hacker culture.[8]

[7] Douglas Thomas, Hacker Culture (Minneapolis: University of Minnesota Press, 2002).

[8] Enid Gabriella Coleman, Coding Freedom: The Ethics and Aesthetics of Hacking (Princeton: Princeton University Press, 2012).

For this tension is also at the heart of hacker culture: even if hacker culture is a place to push new conceptions of technology, ownership and collaboration, the Hacker Revolution is financed by working for “the Man.” The hacker culture that blossomed at universities in the 1960s was possible only through liberal funding from the Department of Defense. Today many leading free and open-source software developers work at Google.

Grown (Wo)men Afraid of Mice

If we want to know about hacker culture’s attitude towards user interfaces, we can start by looking for anecdotal evidence. In an interview about his computing habits, arch-hacker Stallman actually seems to closely resemble the hypothetical GUI-eschewing “nerd” from Rands’s article:

“I spend most of my time using Emacs[9] [a text editor]. I run it on a text console [a terminal], so that I don’t have to worry about accidentally touching the mouse pad and moving the pointer, which would be a nuisance. I read and send mail with Emacs (mail is what I do most of the time). I switch to the XConsole [a GUI] when I need to do something graphical, such as look at an image or a PDF file.”[10]

[9] Emacs (Editor MACroS), created in 1976 by Richard Stallman, is an extensible text editor that can run in text mode. Very popular with developers, it can be used to carry out most everyday tasks, such as browsing the Web, sending email, writing and compiling code, and reading files. (Ed.)

[10] See the interview with Richard Stallman on The Setup: http://b-o.fr/setup


Richard Stallman does not even use a mouse. This might seem to be an outlier position, yet he is not the only hacker to hold it; otherwise, there would be no audience for the open-source window manager Ratpoison [2000]. This software allows one to control the computer without any use of the mouse, metaphorically killing it.

The mouse was invented by Douglas Engelbart [in the late] 1960s. It was incorporated into the Xerox [Alto] system [in 1973] that went on to inspire the Apple Macintosh [1984]. Steve Jobs [the co-founder of Apple] commissioned [designer] Dean Hovey to come up with a design that was cheap to produce, and simpler and more reliable than Xerox’s version.[11] The mouse […] quickly spread to PCs, and became indispensable to everyday users once the Windows OS went mainstream in the 1990s. The mouse is part of the paradigm of these graphical user interfaces [WIMP], as is the WYSIWYG interaction model. The ascendancy of these interaction models is intrinsically linked to personal computers becoming ubiquitous in the 1990s. Stallman and like-minded spirits do not see themselves as part of this tradition. They relate rather to the roots of the hacker paradigm of computing that stretch back further to when computers were not yet personal, and when they ran on Unix.

[11] Malcolm Gladwell, “Creation Myth: Xerox PARC, Apple, and the Truth about Innovation,” New Yorker, May 16, 2011. [Online] http://b-o.fr/parc (Editor’s note: see also Alex Soojung-Kim Pang, “Mighty Mouse,” Alumni.stanford.edu, 2002. [Online] http://b-o.fr/mouse)

Unix, Hacker Culture’s Gilgamesh

The Unix operating system plays a particular role in the system of cultural values that make up programming culture. Developed in the 1970s at AT&T, it became the dominant operating system during the mainframe era of computing. In that setup, one large computer runs the main software, and various users log in to the central computer from their own terminals. This terminal is an interface that allows one to send commands and view the results—the actual computation being performed on the mainframe. Variants of Unix became widely used in the business and academic worlds. The very first interface with the mainframe computers was the teletype: an electronic typewriter that allows one to type commands transmitted to the computer, and to subsequently print the response. As teletypes were replaced by computer terminals with CRT displays, interfaces often remained decidedly minimal.


It is much cheaper to use text characters to create interfaces than to have full-blown graphical user interfaces, especially as the state of the interface has to be sent over the wire from the mainframe to the terminal. Everyone who has worked in a large organization in the 1980s or 1990s remembers the keyboard-driven user interfaces of the time.
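A back-of-the-envelope calculation shows the scale of the difference. The figures below are illustrative assumptions rather than historical measurements: an 80 × 24 character screen (the layout of classic terminals such as the VT100), a hypothetical 640 × 480 monochrome bitmap, and a 9600-baud serial line carrying about ten bits per byte.

```python
# Illustrative assumptions, not historical measurements.
COLUMNS, ROWS = 80, 24        # a classic character terminal
WIDTH, HEIGHT = 640, 480      # a hypothetical monochrome bitmap display
BAUD = 9600                   # bits per second on the serial line
BITS_PER_BYTE_ON_WIRE = 10    # assumes 8 data bits plus start and stop bits


def screen_bytes_text(columns: int, rows: int) -> int:
    """One byte per character cell."""
    return columns * rows


def screen_bytes_bitmap(width: int, height: int, bits_per_pixel: int = 1) -> int:
    """Raw, uncompressed pixels."""
    return width * height * bits_per_pixel // 8


def transfer_seconds(n_bytes: int, baud: int = BAUD) -> float:
    """Time needed to send n_bytes over the serial line."""
    return n_bytes * BITS_PER_BYTE_ON_WIRE / baud


text = screen_bytes_text(COLUMNS, ROWS)
bitmap = screen_bytes_bitmap(WIDTH, HEIGHT)
print(f"text screen:   {text} bytes, {transfer_seconds(text):.1f} s")
print(f"bitmap screen: {bitmap} bytes, {transfer_seconds(bitmap):.1f} s")
```

Under these assumptions a full text screen is 1920 bytes (about 2 seconds) against 38400 bytes (about 40 seconds) for the bitmap. Real terminals usually sent only the characters that changed, and graphics can be compressed, so the ratio of roughly twenty to one is an order-of-magnitude illustration, not a benchmark.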

This vision of computing was completely disrupted by the success of the personal computer. Bill Gates’s vision of “a personal computer in each home” became a reality in the 1990s.[12] A personal computer is self-sufficient: it stores data on its own hard drive, and performs its own calculations. A PC is not hindered by the need to constantly interact with a mainframe, and as processing speed increased, PCs replaced text-based input with sophisticated graphical user interfaces.

[12] Hannah Bae, “Bill Gates [Microsoft] 40th Anniversary Email: Goal was ‘a computer on every desk’ [with Microsoft software in it],” CNN (2015). [Online] http://b-o.fr/microsoft (Ed.)

After Windows operating systems gained ascendancy, Unix became something of a relic for most mainstream computer users: after conquering homes, Windows set out to conquer the workplace as well. In [1993]’s Jurassic Park, when the computer-savvy girl needs to circumvent computer security to restore power, she is surprised to find out that the park’s system runs on a Unix OS.

[In 2001], the tables turned when Apple introduced Mac OS X, an operating system based on Unix. At the same time, slowly but surely, the GNU/Linux operating system made its appearance: […] a cornerstone of the movement for free and open-source software […], it is available to everyone to use, distribute, study and modify freely. Even if both these Unix-based systems are built on the same technology as the Unix that powers mainframe computers, these newer versions of Unix are used in a completely different context. GNU/Linux and OS X are designed to run on personal computers, and both come with a […] GUI, making them accessible to users who have grown up on Windows and the classic Mac OS.

All of a sudden, a new generation began to appropriate Unix: a generation that never actually had to use a Unix system at work. Alan Kay[13] [one of the pioneers of personal computing] claims that the culture of programming is forgetful.[14] It is true that a new generation of programmers completely “forgot” Unix’s rejection by consumers only a few years before, let alone the reasons for its demise. Yet the cultural knowledge embodied in Unix is now part of a community. The way in which Unix is used today might be completely different from the 1970s, but Unix itself and the values it embodies have become something that unites different generations who identify with hacker culture. […]

[13] Alan Kay, born in 1940, is an American computer scientist who worked at Xerox in the early 1970s on the first object-oriented languages (Smalltalk). He is also one of the main designers of the personal computer. (Ed.)

[14] Stuart Feldman, “A Conversation with Alan Kay,” Queue 2:9 (New York: ACM, 2004), 20–29.

Unix has been described as “our Gilgamesh epic” by [sci-fi writer] Neal Stephenson, and its status is that of a living, adored, and complex artifact.[15] Its epic nature is an outgrowth of its morphing flavors, always under development, that nevertheless adhere to a set of well-articulated standards and protocols: flexibility, design simplicity, clean interfaces, openness, communicability, transparency, and efficiency.[16] As Stephenson, who is also a fan, explains: “Unix is known, loved, and understood by so many hackers that it can be re-created from scratch whenever someone needs it.”[17]

[15] The Epic of Gilgamesh is a legendary tale of ancient Mesopotamia and one of humankind’s oldest literary works. See: Neal Stephenson, In the Beginning… Was the Command Line (New York: William Morrow, 1999). (Ed.)

[16] Stephenson, ibid., and Mike Gancarz, The Unix Philosophy (Boston: Digital Press, 1995). (Ed.)

[17] Stephenson, ibid., and Coleman, op. cit., 37. (Ed.)

The Primacy of Plain Text

If there is a lingua franca in Unix, it is plain text. Unix originated during a period when users would type in commands on a teletype machine, and typing commands is still considered an essential part of using Unix-based systems today. Many core Unix programs are launched by typing text commands, and their output is often in the form of text. This is equally true for classic Unix programs and for programs written today. Unix programs are constructed so that the output of one program can be fed into the input of another program: this ability to chain commands in “pipes” depends on the fact that all these programs share the same format of input and output, namely streams of text. The central program in the life of a practitioner of hacker culture is the text editor. Unlike a program like Word, a text editor shows the raw text of a file, including any formatting commands. This is still the main paradigm for how programmers work on a project: as a bunch of text files organized into folders. […]
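The pipe principle can be mimicked in a few lines of Python, where each function reads lines of text and yields lines of text, so that any stage can feed any other. The functions `grep`, `sort` and `uniq_count` are toy stand-ins named after the Unix commands: a sketch of the principle, not a reproduction of the real programs or their options.

```python
from collections.abc import Iterable, Iterator
from itertools import groupby

# Toy stand-ins for grep -i, sort and uniq -c. Each stage consumes
# lines of text and produces lines of text, like a program in a pipe.


def grep(pattern: str, lines: Iterable[str]) -> Iterator[str]:
    """Keep lines containing pattern, ignoring case (like grep -i)."""
    return (line for line in lines if pattern.lower() in line.lower())


def sort(lines: Iterable[str]) -> Iterator[str]:
    """Sort lines by code point, so uppercase sorts before lowercase."""
    return iter(sorted(lines))


def uniq_count(lines: Iterable[str]) -> Iterator[str]:
    """Collapse adjacent duplicates and prefix a count (like uniq -c)."""
    for line, group in groupby(lines):
        yield f"{sum(1 for _ in group):>7} {line}"


text = """Unix pipes
plain text
unix pipes
Unix pipes
word processor"""

# Roughly the shell pipeline: grep -i unix | sort | uniq -c
for line in uniq_count(sort(grep("unix", text.splitlines()))):
    print(line)
```

Because every stage speaks the same format, stages can be reordered or swapped without any of them knowing about the others, which is the property the paragraph above attributes to Unix programs.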

While programming, one has to learn how to create a mental model of the object programmed.[18] As the programmer only sees the code, they have to imagine the final result while editing, then compile and run the project to see if the projection was correct. […] While WYSIWYG has a shorter feedback loop [than text-based interfaces], it also adds complexity. Anyone who has used Word knows the scenario: after applying several layers of formatting, the document’s behavior seems to become erratic. Remove a carriage return, and the whole layout of a subsequent paragraph might break. This is because the underlying structure of Rich Text Format […] remains opaque to the user. With increased ease of use comes a number of edge cases and a loss of control over the underlying structure. This is a trade-off someone steeped in [hacker culture] might not be willing to make. […]

[18] See: Jeff Johnson, Designing with the Mind in Mind: Simple Guide to Understanding User Interface Design Rules (Burlington: Morgan Kaufmann, 2010). (Ed.)

Hacker Culture’s Bias is Holding Back Interface Design

[…] Shaped by the culture of Unix and plain text, and by the practice of programming, most open-source developers are not interested in WYSIWYG interfaces. Following the mantra of “scratching one’s own itch,” developers work on the interfaces that interest them. […] [Consequently], the range of WYSIWYG libraries on offer is meager. Even if HTML5’s contentEditable attribute [which supports WYSIWYG interface design] has been around for ages, it is not used all that often; consequently there are still quite a few implementation differences between the browsers. The lack of interest in WYSIWYG editors means the interfaces are going to be comparatively flaky, which in turn confirms the suspicion of programmers looking for an editing solution that WYSIWYG is not viable. There are only two editor widgets based on contentEditable that I know of: Aloha [2010][19] and Hallo.js [2013].[20] Aloha is badly documented and not easy to wrap your head around, as it contains quite a lot of code. Hallo.js sets out to be more lightweight, but for now is a bit too light: it lacks basic features like inserting links and images.

[19] Aloha Editor is a WYSIWYG webpage publishing engine. Developed in JavaScript, it aims to establish an alternative to the HTML5 contentEditable attribute. Development of the second version, which was intended to be profitable, stopped in May 2016. The first version, released under the free GPL license, is still operating. See: http://b-o.fr/aloha (Ed.)

[20] Hallo.js is free software (MIT License) designed to edit the content of a webpage. It is a jQuery UI plug-in written in JavaScript, initiated by Henri Bergius in 2013. See: http://hallojs.org (Ed.)

[…] If WYSIWYG were less of a taboo in hacker culture, we might also see interesting solutions that cross the divide of code/WYSIWYG. A great, basic example is the “reveal codes” function of WordPerfect [1980], the most popular word processor before the ascendancy of Word.[21] When running into a formatting problem, using “reveal codes” shows an alternative view of the document, highlighting the structure by which the formatting instructions have been applied, not unlike the “DOM inspector” in today’s browsers. More radical examples of interfaces that combine the immediacy of manipulating a canvas with the potential of code can be found in desktop software. The 3D editing program Blender [1995] has a tight integration between a visual interface and a code interface.[22] All the actions performed in the interface are logged in programming code, so that one can easily base scripts on actions performed in the GUI. Selecting an element will also show its position in the object model for easy scripting access.

[21] See: Erik van Blokland, “The Underwater Screen or Lessons From WordPerfect,” i.liketightpants.net (2014). [Online] http://b-o.fr/wordperfect

[22] Software applications like AutoCAD and After Effects follow similar principles, to a certain extent. (Ed.)
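The idea behind “reveal codes” can be sketched with a toy model of rich text: a paragraph as a list of runs, each carrying a set of styles. The `Run` class and the `[Bold on]`/`[Bold off]` notation are hypothetical, chosen for illustration; they are not WordPerfect’s actual file format or code names. The same data yields two views: the bare text as an editing surface presents it, and the sequence of formatting codes underneath.

```python
from dataclasses import dataclass, field

# A hypothetical rich-text model for illustration only: each run is a
# stretch of text plus the set of styles applied to it.


@dataclass
class Run:
    text: str
    styles: frozenset = field(default_factory=frozenset)


def plain_view(runs: list[Run]) -> str:
    """The text as the user edits it: the formatting codes stay hidden."""
    return "".join(run.text for run in runs)


def reveal_codes(runs: list[Run]) -> str:
    """Show where each style is switched on and off between runs."""
    out, active = [], frozenset()
    for run in runs:
        for style in sorted(active - run.styles):   # styles that end here
            out.append(f"[{style} off]")
        for style in sorted(run.styles - active):   # styles that start here
            out.append(f"[{style} on]")
        out.append(run.text)
        active = run.styles
    for style in sorted(active):                    # close anything still open
        out.append(f"[{style} off]")
    return "".join(out)


doc = [
    Run("A "),
    Run("grand", frozenset({"Bold"})),
    Run(" and ", frozenset({"Bold", "Italic"})),
    Run("italic", frozenset({"Italic"})),
    Run(" title"),
]
print(plain_view(doc))
print(reveal_codes(doc))
```

Overlapping styles, such as the stretch that is both bold and italic here, are exactly what becomes hard to reason about when the codes stay hidden.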

HTML is flexible enough that one can edit it with a text editor, but one can also create a graphical editor that works with HTML. Through the JavaScript language, a Web interface has complete dynamic access to the page’s HTML elements. This makes it possible to imagine all kinds of interfaces that go beyond the paradigms we know from Word, on the one hand, and code editors on the other. This potential comes at the expense of succinctness: to be flexible enough to work under multiple circumstances, HTML has to be quite verbose. Even though the HTML5 standard has already added some modifications to make it terser, it is not succinct enough for adepts of hacker culture: hence solutions like Markdown. However, to build a workflow around such a sparse plain text format is to refuse the notion that different people might want to interact with the content in different ways.
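The argument can be illustrated by keeping the content in one structured model and deriving more than one textual form from it. In the sketch below, the list-of-blocks `document` and its two block kinds are hypothetical simplifications: a writer at home in plain text could work on the Markdown form, while a graphical editor could operate on the HTML form of the same content.

```python
import html

# A hypothetical, deliberately tiny document model: (kind, text) blocks.
document = [
    ("heading", "Hacker Culture"),
    ("paragraph", "Programming is embedded in a culture."),
]


def to_markdown(blocks) -> str:
    """Terse plain-text form, for those who prefer a text editor."""
    return "\n\n".join(
        f"# {text}" if kind == "heading" else text for kind, text in blocks
    )


def to_html(blocks) -> str:
    """Explicit markup, which a WYSIWYG editor can manipulate."""
    tags = {"heading": "h1", "paragraph": "p"}
    return "\n".join(
        f"<{tags[kind]}>{html.escape(text)}</{tags[kind]}>" for kind, text in blocks
    )


md, markup = to_markdown(document), to_html(document)
print(md)
print(markup)
print(len(md), "characters of Markdown vs", len(markup), "characters of HTML")
```

Here the Markdown comes to 55 characters against 68 for the HTML; the point is less the saving than the fact that neither form has to be the only way in.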

The interface that is appropriate to a writer might not be the interface that is appropriate to an editor, or to a designer. […]