What are the links between information and computing? How and why was computing invented? Are computers capable of representing data and creating forms? To explore these questions, the Back Office team interviewed Jean Lassègue,[^3] philosopher, epistemologist, and author of a biography of Alan Turing.[^4]

[^3]: Researcher at the CNRS (Institut Marcel Mauss-EHESS) and Director of the LIAS (Linguistics, Anthropology, and Sociolinguistics) Research Team.
[^4]: Jean Lassègue, Turing (Paris: Les Belles Lettres, 1998).

Back Office What exactly was information for Alan Turing?

Jean Lassègue As far as I know, Turing rarely used the word ‘information’; he preferred the technical notion of “weight of evidence.” There are two competing definitions of the concept of information. The first is Claude Shannon’s[^5] (1948), which defines information in terms of the transmission of a signal within a statistical theory of communication: the rarer a signal, the more informative it is. The second was put forward by the Russian mathematician Andreï Kolmogorov in the 1960s, within the theory of computability founded by Turing. Here information is defined in terms of programs: it measures the complexity of the program that describes an object, so that the richer an object is in information, the more complex its description by a program. Those are the theoretical aspects… However, in common parlance (and this is the sense of the word that Turing preferred), this is not what is understood by ‘information’, which we relate to the notion of meaning. For a piece of ‘information’ to make sense to a human, it has to be spatialized, i.e., represented within a space (a two-dimensional one in the case of graphic interfaces). Its relation to space necessarily implies the construction of a form distinguishable from its content. This is how meaning is constructed: it is above all geometric and non-linguistic, and consequently does not presuppose a signal, code, or message, as the mathematician René Thom[^6] stated with great insight. In summary, to signify is, at its most fundamental, to lend form to space.

[^5]: Claude Shannon (1921–2001) was an American electrical engineer and mathematician. He is one of the founders of information theory, which concerns the quantification of information in terms of probabilities.
[^6]: René Thom (1923–2002) was a French mathematician and epistemologist who was a founder of catastrophe theory.

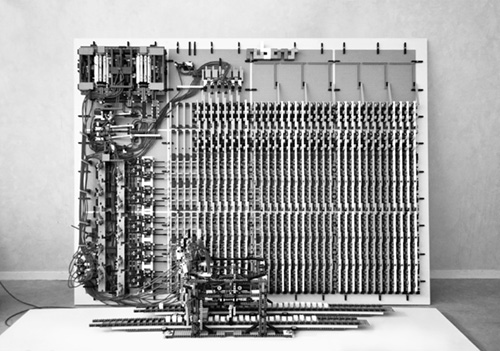

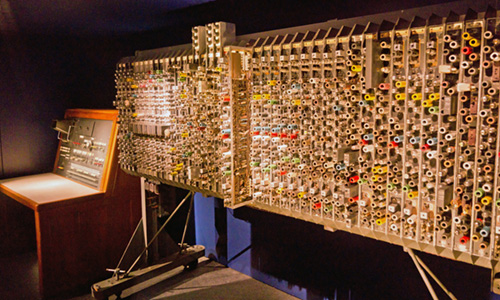

If one reflects along these lines, one might consider the tape, unfolding in linear time, of the machine described by Turing in 1935. He called it a “paper machine” because it was an abstract mathematical machine that, initially, was not physically realized. It amounts to the extreme reduction of space to a graphic interface of only one dimension, that of the paper on which one inscribes marks: each box is either empty or checked, and that’s all. It could not be simpler. You can always achieve more complexity by adding empty boxes and checked boxes. Turing went as far as to postulate that it would be possible to simulate human ingenuity by adding new boxes to the tape.
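The one-dimensional tape of empty and checked boxes can be sketched in a few lines of Python. This is a toy model under stated assumptions, not a reproduction of Turing's own formalism: each box holds 0 (empty) or 1 (checked), and a hypothetical two-rule table adds one to a number written in unary by checking the first empty box after a run of checked ones.

```python
from collections import defaultdict

BLANK, MARK = 0, 1  # an empty box or a checked box: the only two symbols on the tape

# Hypothetical transition table: (state, symbol read) -> (symbol to write, head move, next state).
# This machine adds one to a number written in unary (n checked boxes in a row).
RULES = {
    ("scan", MARK): (MARK, +1, "scan"),  # skip over checked boxes, moving right
    ("scan", BLANK): (MARK, 0, "halt"),  # check the first empty box, then stop
}

def run(tape_cells, state="scan", head=0, max_steps=10_000):
    """Execute the rule table box by box and return the visited portion of the tape."""
    tape = defaultdict(int, enumerate(tape_cells))  # unbounded tape; unvisited boxes read as empty
    for _ in range(max_steps):
        if state == "halt":
            break
        write, move, state = RULES[(state, tape[head])]
        tape[head] = write
        head += move
    else:
        raise RuntimeError("machine did not halt within max_steps")
    return [tape[i] for i in range(min(tape), max(tape) + 1)]

print(run([MARK, MARK, MARK]))  # [1, 1, 1, 1]: three checked boxes become four
```

Everything the machine "knows" lives in the rule table; complexity grows only by adding states, rules, and boxes, which is the sense in which one can "achieve more complexity by adding empty boxes and checked boxes."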


In fact, between information as a linguistic concept and meaning as a geometric concept there is an abyss: that of the intelligibility of space. The concept of information is not a spatial concept but a linguistic one, and that is why Turing could make a radical separation, within the machine that bears his name, between the level of software (beyond space) and the material level of hardware (within spatial constructs). In computing, the portability of software is crucial: it is the capacity of a program to be executable, potentially, on any machine. Even if your machine and mine are not made of the same material and do not occupy the same bit of space, you can run the same software on both of them. This presupposes a hardware/software dualism that is an extraordinary tour de force, incredibly productive, and whose effects we experience every day. What is astonishing, historically speaking, is that computing was born of a fantasy particular to Turing, even more pronounced than Descartes’,[^7] of a strict separation between body and mind, between hardware and software.

[^7]: Descartes postulates the idea of a dualism between body and soul: “each of us thus considered is truly distinct from any other thinking substance, and all other corporeal substance.” (Principles of Philosophy, Part 1, §60, 1644, trans. Aviva Cashmira Kakar).

Back Office In point of fact, can this “paper machine” truly be considered an interface? Can it be utilized by a human?

JL Yes and no… In theory, a human being should be capable of following the execution of a program, box by box. However, there are so many boxes to check that one is quickly obliged to delegate this task to software capable of translating what you formulate logically into code that an uncomprehending machine can execute… I can cite a very simple example: in order to display Latin, Arabic, or Chinese characters on a screen, you need a panoply of dedicated programs that you don’t see, that you cannot see, and that no computer scientist sees, which transform the sign carrying meaning into a meaningless mark usable only by a computer. You may be an ace in programming languages, but you are still working at a level where, evidently, you are not programming in binary, in terms of the boxes of the paper machine invented by Turing; it would be meaningless to do so. Consequently, when users type text on their keyboard, the two-dimensional alphanumeric characters acquire a third, digital dimension, linked to their encoding as binary signs. There is an invisible depth, which means that each character is translated across all sorts of technical levels. Today, all writing systems, whether alphabetic or not, are based on this model, and it is a completely new development in the history of writing. It means that you lose control over the graphic process and find yourself obliged to trust existing algorithms, the developers who wrote the assembler, the manufacturers of the machine, etc. At every one of these levels, one can always suspect that there has been manipulation. This depth that eludes us, linked to the extraordinary multiplication of the levels of translation, probably feeds the ambient paranoia.
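A glimpse of this “invisible depth” can be given with a short Python sketch, assuming UTF-8 as the encoding (one common choice among several; the real chain of translations, from keyboard to font rendering, involves many more layers than shown here). Each character goes from a visible sign to a Unicode code point, then to bytes, then to binary digits. The function name `describe` is hypothetical.

```python
def describe(char: str) -> str:
    """Trace one character from sign to code point to UTF-8 bytes to bits."""
    code_point = ord(char)          # the character's Unicode code point, an integer
    encoded = char.encode("utf-8")  # the bytes actually stored or transmitted
    bits = " ".join(f"{byte:08b}" for byte in encoded)  # the same bytes as binary digits
    return f"U+{code_point:04X} -> {encoded.hex(' ')} -> {bits}"

# A Latin, an Arabic, and a Chinese character.
for char in ["A", "\u0628", "\u4e2d"]:  # "A", Arabic letter beh, Chinese character zhong
    print(char, describe(char))
```

For 中 it prints `U+4E2D -> e4 b8 ad -> 11100100 10111000 10101101`: one visible sign, three bytes, twenty-four binary boxes, none of which the writer ever sees.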

BO Was the risk of the subjugation of human beings to machines already on Turing’s mind?

JL This rather metaphysical question has been asked since the 1950s. Turing considered it from a highly sociological standpoint, asking which human groups could take control of programming:

Roughly speaking those who work in connection with the ACE will be divided into its masters and its servants. Its masters will plan out instruction tables for it, thinking up deeper and deeper ways of using it. Its servants will feed it with cards as it calls for them. They will put right any parts that go wrong. They will assemble data that it requires. In fact the servants will take the place of limbs. As time goes on the calculator itself will take over the functions both of masters and of servants. The servants will be replaced by mechanical and electrical limbs and sense organs. […] The masters are liable to get replaced because as soon as any technique becomes at all stereotyped it becomes possible to devise a system of instruction tables which will enable the electronic computer to do it for itself. It may happen however that the masters will refuse to do this. They may be unwilling to let their jobs be stolen from them in this way. In that case they would surround the whole of their work with mystery and make excuses, couched in well chosen gibberish, whenever any dangerous suggestions were made. I think that a reaction of this kind is a very real danger. This topic naturally leads to the question as to how far it is possible in principle for a computing machine to simulate human activities.[^8]

[^8]: Alan Turing, “Lecture on the Automatic Computing Engine,” given at the London Mathematical Society, February 20, 1947, in: The Essential Turing: Seminal Writings in Computing, Logic, Philosophy, Artificial Intelligence, B. Jack Copeland ed. (New York: Oxford University Press, 2004), 378–394.


What is interesting is that Turing defines users as “servants” and programmers as “masters.” He also remarks that these very programmers can themselves become servants. As soon as there is a stereotype, there is an order, and that order is programmable. This is what Turing studied with his “imitation game” (which has since passed into posterity under the inexact name of the “Turing test”), set out in an article written in 1950, in which he imagines a situation where a human being must determine, through a dialogue with an unidentified entity, whether that entity is a machine or not.[^9] Turing’s answer is ambiguous. Explicitly, he states that a machine could replace a human being; implicitly, he states the opposite, since a computer is a deterministic machine while the material world (e.g., the human brain) is not deterministic in the same manner, and Turing concludes that the discrete-state machine that is a computer is not an adequate model for conceiving biological growth.

[^9]: Alan Turing, “Computing Machinery and Intelligence,” Mind, 59:236 (Oxford: Oxford University Press, 1950), 433–460.

BO On that note, what are your thoughts regarding online services such as The Grid (“AI websites that design themselves”[^10]), whose ambition is to replace designers with algorithms?

[^10]: The aim of the startup *TheGrid.io* is to automate the layout and editorial content of websites. Their demo video can be seen at http://b-o.fr/thegrid

JL Your example, The Grid, aims to produce a variety of forms using a database, undoubtedly an enormous one, which is perpetually augmented and transformed by what are called “deep learning” mechanisms that draw on big data. But this promise of variety necessarily implies some sort of formatting.[^11] This reminds me of the cybernetics of the 1950s, whose goal was to model and manage the human mind. Over the last three decades it fell by the wayside, as we realized that human society was more complicated than we thought, made up of a multitude of interactions that could never be fully simulated by computer code. I feel as if we are returning to this sort of archaism, whose tendency is to reduce the world in which we live to simplistic semiotics. Moreover, if we examine the visual identity of The Grid, we find spatial imagery that includes representations of cosmonauts, a hallmark of the era of cybernetics. It’s amusing, because here the conquest of space, i.e., spatiality, is a Cartesian product with simple, calculable geometric coordinates. The limitations of this type of service will undoubtedly become evident quickly.

[^11]: Bummykins, “Finally got to see thegrid.io sites. I think your jobs are safe.” Reddit.com, March 2016: http://b-o.fr/bummykins


BO Can machines create anything unexpected?

JL It depends on how one views things… One might be surprised by the result of a program, but the program itself is not random: it is deterministic, and was made to be! True randomness is very difficult to conceptualize and, apart from the particle spins studied at CERN in Geneva, there are no programs that produce randomness of that kind. In a program, everything that is truly random is considered noise or corrupted data, whereas it is in fact noise that defines difference and enables the emergence of meaning. In my view, these surprises are without a doubt to be found not in the machine itself but in its interaction with humans, and in the interpretation humans in turn make of it. What Turing came to understand at the end of his life is that this surprise is not psychological at all, and that it has very profound theoretical reasons, which spring from the fact that nature herself is not deterministic the way a program is. This is why, towards the end of his life, Turing developed an interest in morphogenesis, the origin and development of biological forms, a phenomenon that occurs at the border of chaos. To wit, he wrote:


The nervous system is certainly not a discrete-state machine. A small error in the information about the size of a nervous impulse impinging on a neuron may make a large difference to the size of the outgoing impulse. It may be argued that, this being so, one cannot expect to be able to mimic the behavior of the nervous system with a discrete-state system. It is true that a discrete-state machine must be different from a continuous machine.[^14]

[^14]: Turing, “Computing Machinery and Intelligence,” op. cit., 451.

Moreover, if one must retain only one idea of Turing’s, it is that the incalculable and the calculable are two faces of the same coin. Using calculation itself, he demonstrated that it is bound by internal limitations: defining calculability was not so much a matter of supposing that there is something beyond calculation but, on the contrary, of remaining strictly within the framework of calculation (that is to say, the “Turing machine”) in order to demonstrate, by reductio ad absurdum, that there is indeed something that escapes it. One can thus show that there are limits to calculability, and this is essential to defining a specific domain scientifically.

BO Can a programming language, which is by nature calculable, be as rich in meaning as human language is?

JL I think it’s a pity to speak of “programming languages” in the plural because, in my view, there is only one: there is the programming language founded upon a certain class of mathematical functions, the functions referred to as “calculable,” and then there are “languages,” that is to say different ways of presenting and exploiting the expressive resources of the language in question. Obviously, one cannot reform language, and the expression “programming languages” in the plural is now self-evident to everyone. What is extraordinary is that all these “languages” have the same computational capacity from a mathematical standpoint, and yet we have invented thousands of them![^15] We thus find ourselves in a situation that seems to me very interesting linguistically speaking, where we have, simultaneously, a unity of computing language and an increasing diversification of what one must call “programming languages.” This is exactly what we have encountered in the history of humanity: you have language along with multiple languages that continue to evolve and gain in complexity. People do not speak in the same manner everywhere because what sociologists call the “division of labor” is increasingly intensive. Take for example the community of statisticians: they need to calculate averages, so they require a programming language containing a command that computes averages without their having to rewrite the lines of code that perform this calculation. However, if you are a webpage designer, you don’t need a command to compute averages but rather a command to create tags, so you need a language adapted to your needs.

[^15]: Provided, that is, that they are Turing complete, i.e., capable of representing all the calculable (recursive) functions that can be modeled in the form of a Turing machine.

But the analogy with natural languages stops there because, unlike programming “languages,” which are all univocal within their specific domain, natural languages are polysemous and in continual transformation. Consequently, it is particularly difficult to conceive of a computer analysis of natural languages, precisely because they are forms, and, as forms, they modify their own conditions of production, which is not the case for programming languages.

Let me cite an example. In Balzac’s novel Eugénie Grandet (1833), one finds the following sentence:

The old cooper, eaten up with ambition, was looking, they said, for a peer of France, to whom an income of three hundred thousand francs would make all the past, present, and future casks of the Grandets acceptable.[^16]

[^16]: Honoré de Balzac, Eugénie Grandet. Scènes de la vie de Province [1833], English translation: Project Gutenberg, Katharine Prescott Wormeley, trans., March 1, 2010 [EBook #1715]. http://b-o.fr/gutenberg

Here, ‘casks’ are a sort of metaphor for a source of wealth. You won’t find that in a dictionary, and yet it poses no problem: we understand immediately, without having to imagine a translation of ‘casks’ as ‘source of wealth’. The ‘wealth’ is already there, entirely contained within the ‘cask’… The way language draws metaphors from its own material is such that an encyclopedic approach to the lexicon will never suffice to make language completely intelligible, because the transformation of its mode of production is its very engine of change. When you use words, you are simultaneously acting upon the words you select and transforming their meaning. Will the word ‘cask’ have exactly the same meaning for you after reading Balzac’s sentence in Eugénie Grandet? I’m not sure that it will. This cannot be translated into formal languages, since the word ‘cask’ never signified ‘fortune’ in French, yet that sense will nevertheless remain as a sort of possible harmonic associated with the word. How can a machine comprehend this?

Interview conducted by Kévin Donnot and Anthony Masure on July 11, 2017, Paris, France.