The processing and representation of data require a variety of skills, from visual design and programming to statistics and interaction design. How can these diverse areas of expertise be passed on within ever more specialized training programs? Sandra Chamaret and Loïc Horellou, teachers at the Haute École des Arts du Rhin (HEAR), survey a range of distinctive teaching contexts intended to make students aware of the issues inherent in this multidisciplinary field.

Schools and Archives

In 2017, Douglas Edric Stanley and Cassandre Poirier-Simon, two teachers of a course in algorithmic design at the Geneva School for Art and Design (HEAD), led a workshop in conjunction with the Bodmer Lab (part of the University of Geneva) based upon the holdings of the Bodmer Foundation. This institution houses a museum and a library built from the private collection of the bibliophile Martin Bodmer (1899–1971); its holdings consist of over 150,000 documents (precious manuscripts, first editions, etc.). The objective of the one-week workshop was to experiment with novel uses of the holdings through innovative working methods. Three students from the group (Mathilde Buenerd, Nicolas Baldran and David Héritier) chose to work from a corpus of several hundred editions of Faust, published by Goethe in 1808. This famous story recounts the torment of Heinrich Faust, an erudite man of science who, at the end of his life, realizes that, for all his knowledge, a deeper understanding of the world still eludes him and he remains incapable of enjoying life. He therefore pledges his soul to the Devil if the Devil can free him from his profound dissatisfaction.

Their project, Ultimate Faust, consists of a generative program and a series of scripts (Photoshop and Processing) that enabled them to extract paratextual elements (notes, page numbers, decoration, page format, even scanner artifacts) from around twenty editions of Faust (in Urdu, German and other languages, from some of which only the chapter numbers were preserved). The resulting database, a collection of very high-definition images, is then merged to create a new hybrid publication, a unique copy among all the possible multiples, which compiles the elements extracted from the original editions as facsimiles in their original context: a detail from page twenty-eight of a source work is printed in the same position on page twenty-eight of the synthesized book. The project plays on the notions of multiple and unique copies by creating a dialogue between editions, juxtaposing eras, places and languages, and acting as a sort of mise en abyme of the utopia of universal knowledge. This unique book seeks to embody all the preceding editions of Faust and will in turn join the Bodmer Collection, alongside its predecessors.
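
The page-position rule described above can be sketched in a few lines of Python. This is not the students' code (they worked with Photoshop and Processing scripts); it is a minimal sketch assuming a hypothetical `Fragment` record for each extracted element and a hypothetical `synthesize_book` function. Fragments are grouped by source page, so a detail from page 28 of any edition lands on page 28 of the hybrid book, and a seeded random selection stands in for whatever choices made each copy unique.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Fragment:
    """One paratextual element cut from a scanned page (hypothetical model)."""
    edition: str   # source edition, e.g. "German"
    page: int      # page number in the source edition
    kind: str      # "note", "page number", "ornament", "scanner artifact", ...
    bbox: tuple    # (x, y, width, height) on the source page, in pixels


def synthesize_book(fragments, seed, keep_ratio=0.5):
    """Return {page: [fragments]} for the hybrid book (hypothetical helper).

    Each fragment keeps its source page number and bounding box, so it is
    placed at the same spot on the same page of the new book. The seed
    decides which fragments survive: one seed, one unique copy.
    """
    rng = random.Random(seed)
    book = {}
    for frag in fragments:
        if rng.random() < keep_ratio:
            book.setdefault(frag.page, []).append(frag)
    return dict(sorted(book.items()))
```

Calling `synthesize_book(fragments, seed=7)` twice returns the same book, while another seed yields a different copy; the actual compositing of pixels onto each page is left out of the sketch.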

Here, the paratextual elements are treated as metadata. For some years now, in large computer systems, metadata and its interconnections have become more important than the data itself. Our world is increasingly modeled on our metadata, and it is this information that computers exploit to grasp the relationships between humans, or between humans and machines. The collection and exploitation of metadata are therefore crucial matters for any organization that deals with archives.

Another educational project, conducted in the autumn of 2017 by Marina Wainer (Master 2 INA Patrimoines Audiovisuels) and Florence Jamet-Pinkiewicz (DSAA 1 Design and Digital Design, École Estienne), took precisely this metadata as a springboard for browsing the heritage collection of the Centre National du Cinéma (CNC). The CNC preserves and manages all French film archives, made up of a multitude of collections of film and photographic documents (negatives, prints, working and editing stages, nitrate film stock from the 1910s, etc.), for a total of approximately 150,000 films. The collection spans a vast period, from the beginnings of cinema to the present day, and is catalogued in a database (with metadata) shared with several regional film libraries. Besides the films themselves, these databases contain all manner of cinematographic material: trailers, screen tests, cut scenes, rushes, technical documentaries, scientific films, home movies, etc. All the information describing these films, notably for identifying and then cataloguing them, becomes part of the metadata: keywords summarizing the film’s content, hidden elements (production company logo, brands, etc.), specific film collages, etc. (see Claude Mussou and Nicolas Hervé’s presentation (INA) at the event di/zaïn #5: code(s)+data(s), February 26, 2012: http://b-o.fr/dizain). The metadata are produced by the CNC, which holds exclusive rights to them, while the films themselves are for the most part either still under copyright, their distribution subject to the rights of the authors, or out of copyright and thus in the public domain.

The students’ assignment was to invent a dynamic visual system for consulting the collection, onscreen or in a physical space. The Archicompo project consists of several interactive objects installed at the Georges Méliès cinema in Montreuil. Visitors handle images from silent films printed on transparencies (acetate, a material that evokes film reels), with which they compose posters for fictitious films, displayed by a video projector for collective viewing. A program with integrated image recognition then writes a fictitious scenario based upon the poster and assembles a newly edited film, each image corresponding to a portion of the generated screenplay. This reworking of the data is reminiscent of the montages created for fairground films.

Both of these examples, Bodmer Lab/HEAD and CNC/Estienne, show the interest that certain heritage institutions have in partnering with art schools. In this type of collaboration, the students’ explorations revitalize the extensive archives, and the teaching projects cast a fresh eye upon the collections. For their part, the schools gain access to vast amounts of data, sometimes already catalogued and organized according to pre-established specifications.

Books and Programs

This type of workshop also provides an occasion to upend students’ ingrained habits and force them to question their working methods. In editorial print design, the creation process is often highly structured: the content is prepared and assembled, laid out using what is often the same desktop publishing software, then printed. Few students envisage that the design of a book can be programmed and automated. Such automation implies other ways of working, in which designers must give up creating their documents by hand. Procedural design is closer to the methods of web page creation, where the structure of the content matters more than its form. As with image metadata on networks, the semantics of the text become central; even if designers remain responsible for the final format, they increasingly act as architects of content.

Today we observe a real desire to question the processes of visual creation, notably because the quantities of content to be managed are no longer compatible with conventional methods. Accordingly, since 2017, the research group PrePostPrint has championed an experimental approach to publishing in which the same content, formatted with web languages (HTML/XML), can be published on different platforms and in different formats. PrePostPrint brings together graphic designers experimenting with this type of process, with a view to creating another way of conceiving printed objects. The Salon de l’Edition Alternative, organized by PrePostPrint at the Gaîté Lyrique (Paris) on October 21, 2017, presented initiatives that used a variety of publishing modes: generative, contributive, open source, etc. (see the website Prepostprint.org and the journal Code x 01, design Julie Blanc, éditions HYX, which documents the show in the manner of a catalogue).
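
The single-source principle can be illustrated with Python’s standard library. This is not PrePostPrint’s tooling; it is a minimal sketch in which the XML vocabulary (`article`, `title`, `para`) and both rendering functions are hypothetical. One structured source is rendered as HTML for the web and as fixed-width text standing in for a print layout, so formatting decisions live in the renderers rather than in the content.

```python
import html
import textwrap
import xml.etree.ElementTree as ET

# Hypothetical structured source: content only, no presentation.
SOURCE = """<article>
  <title>Sample catalogue</title>
  <para>The same content can feed a website and a printed book.</para>
  <para>Only the rendering rules change from one output to the other.</para>
</article>"""


def to_html(root: ET.Element) -> str:
    """Web output: map each XML element to a semantic HTML tag."""
    lines = [f"<h1>{html.escape(root.findtext('title', ''))}</h1>"]
    for para in root.iter("para"):
        lines.append(f"<p>{html.escape(para.text or '')}</p>")
    return "\n".join(lines)


def to_print_text(root: ET.Element, width: int = 40) -> str:
    """Print-like output: same content, fixed measure, title in capitals."""
    title = root.findtext("title", "")
    blocks = [f"{title.upper()}\n{'=' * len(title)}"]
    for para in root.iter("para"):
        blocks.append(textwrap.fill(para.text or "", width=width))
    return "\n\n".join(blocks)


root = ET.fromstring(SOURCE)
print(to_html(root))
print(to_print_text(root))
```

Adding a third output (an EPUB chapter, say) would mean writing one more renderer, without touching the source.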

Since 2015, the graphic communication workshop at HEAR has offered a course in procedural publishing based upon the implementation of algorithms for managing content and creating extensive data sets. Successive editions have included leWeb.imprimer() (2015), in which a website had to be chosen in order to produce an edition from it, generated from the site’s source code and from images collected by web crawlers; data.print() (2016), a publication generated by extracting bibliographic records for a body of works from the media libraries of the city of Strasbourg; and mon catalogue (2017), whose objective was a broader reflection on the notion of the catalogue. For each project, the first stage of work consists of scraping the raw data from websites, databases or, for example, a comma-separated values (CSV) table. This initial phase, difficult to carry out manually because of the number of elements (several thousand), requires specific tools (in particular web crawlers) or programs written for the occasion (in PHP, JavaScript or Python).
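
For the website case, the scraping step can be approximated with Python’s standard library alone. The sketch below is an assumption, not the workshop’s actual tooling: the hypothetical `collect_assets` function parses an HTML page that has already been downloaded and returns the absolute URLs of its images and links, which a crawler would then fetch in turn (for instance with `urllib.request.urlopen`).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class AssetCollector(HTMLParser):
    """Collects image sources and outgoing links from one HTML page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.images = []
        self.links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attribute values may be None for bare attributes
        if tag == "img" and attrs.get("src"):
            self.images.append(urljoin(self.base_url, attrs["src"]))
        elif tag == "a" and attrs.get("href"):
            self.links.append(urljoin(self.base_url, attrs["href"]))


def collect_assets(html_text, base_url):
    """Hypothetical helper: return (image URLs, link URLs), made absolute."""
    parser = AssetCollector(base_url)
    parser.feed(html_text)
    parser.close()
    return parser.images, parser.links
```

A real crawler would also need to deduplicate URLs, stay within one domain and respect the site’s robots.txt; those concerns are omitted here.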

The second phase consists of processing this data: classifying it, filtering it and exporting it into XML format, which makes it possible to define the structure of the content independently of its final form. Images are resized, reframed and optimized to prepress standards with automatable programs such as ImageMagick (1990). Only after this process does the layout work begin, which consists of defining a set of layout rules based on the formatted data. Applying these rules to the data constitutes the layout, strictly speaking. Automatic styles are applied based on the markup of the data or by means of regular expressions that detect textual recurrences. Finally, a few marginal manual operations remain.
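
The filtering, XML export and regex-based styling steps can be sketched with Python’s `re` and `xml.etree.ElementTree` modules. The record fields (`author`, `title`, `year`, `note`), the `<date>` element and the year pattern are hypothetical choices made for illustration; the point is that the XML carries only structure and semantic markup, and a later layout stage decides how a `<date>` is styled.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical recurrence to detect: four-digit years from 1500 to 2099.
YEAR = re.compile(r"\b(?:1[5-9]|20)\d{2}\b")


def add_styled_text(parent, text, pattern, tag):
    """Put `text` inside `parent`, wrapping every regex match in a <tag> child.

    ElementTree stores mixed content as element.text plus each child's tail,
    so the text between two matches goes into the previous child's tail.
    """
    parent.text = ""
    previous = None
    last = 0
    for match in pattern.finditer(text):
        chunk = text[last:match.start()]
        if previous is None:
            parent.text += chunk
        else:
            previous.tail = chunk
        previous = ET.SubElement(parent, tag)
        previous.text = match.group(0)
        last = match.end()
    if previous is None:
        parent.text += text[last:]
    else:
        previous.tail = text[last:]


def records_to_xml(records, min_year):
    """Filter and sort hypothetical catalogue records, then export them as XML.

    Only structure and semantic markup are produced; layout comes later.
    """
    root = ET.Element("catalogue")
    kept = [r for r in records if int(r["year"]) >= min_year]
    for rec in sorted(kept, key=lambda r: (r["author"], r["title"])):
        entry = ET.SubElement(root, "entry", year=rec["year"])
        ET.SubElement(entry, "author").text = rec["author"]
        ET.SubElement(entry, "title").text = rec["title"]
        note = ET.SubElement(entry, "note")
        add_styled_text(note, rec["note"], YEAR, "date")
    return root
```

The serialized result (via `ET.tostring(root, encoding="unicode")`) can then be handed to whatever layout engine applies the rules.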

Quantified Data and Sensors

The notion of quantified data has existed since the beginnings of writing (the birth of numbers and letters is linked to the emergence of agriculture and sedentary life, notably the need to inventory harvests and cultivable land). However, the emergence of big data, a consequence of the rapid development of the Web at the beginning of the 2000s, marks a turning point in the methods of processing and representing information.

These issues became even more pressing with the arrival of what is referred to as the Internet of Things. From 29 to 31 March 2016, the studio Chevalvert led the DataFossil workshop at Stéréolux in Nantes, run by Stéphane Buellet and Julia Puyo (http://b-o.fr/datafossil), with a variety of participants, including graphic design students from Marseille. The workshop proposed using data from a variety of sensors to produce tangible objects that materialize this data. The data handled was similar to that captured by everyday objects: luminosity, pressure, flexion, heart rate, etc. Participants were then invited to create three-dimensional artifacts, a semblance of fossils of this all-digital civilization.
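
One simple way to turn a sensor stream into a physical form is to smooth it and rescale it linearly into a printable range of heights. The sketch below is not the workshop’s method; the function names, the moving-average window and the 2 to 20 mm range are assumptions chosen for illustration.

```python
def rescale(values, out_min, out_max):
    """Linearly map values onto [out_min, out_max]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # flat signal: place everything mid-range
        return [(out_min + out_max) / 2] * len(values)
    span = (out_max - out_min) / (hi - lo)
    return [out_min + (v - lo) * span for v in values]


def relief_profile(readings, base_mm=2.0, max_mm=20.0, window=3):
    """Hypothetical helper: smooth raw sensor readings with a moving average,
    then convert them to extrusion heights in millimetres for a 3D object."""
    if window < 1 or len(readings) < window:
        raise ValueError("need at least `window` readings")
    smoothed = [
        sum(readings[i:i + window]) / window
        for i in range(len(readings) - window + 1)
    ]
    return rescale(smoothed, base_mm, max_mm)
```

The list of heights could then drive the extrusion of a relief in a modeling tool; the moving average keeps sensor noise from dominating the final shape.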

The growing amount of data is a direct consequence of the development of connected sensors, web tracking programs and the exchange of customer databases. It is important for educational institutions to help students develop a strong critical eye regarding the ramifications of life in a digital society, notably the production and distribution of personal data, the professional uses of data from social networks and media, and the reappropriation of public data (data.gouv) or of information made available to the public (e.g. the Panama and Paradise Papers). For art and design students, this critical relationship is expressed mainly through visual formats, in which raw content is given form and invested with meaning.

Designers and Journalists

The main players in the field of data are engineers and computer scientists, but also researchers who manipulate and visualize large quantities of data in order to understand it and advance their hypotheses and research projects (whether historical, social, medical, etc.; see Datalogie. Formes et imaginaires du numérique, Olaf Avenati and Pierre-Antoine Chardel, eds., Paris: Editions Loco, 2016). Diagrams, bar charts and pie charts are visual representations invented by economists, nurses, epidemiologists, statisticians and geographers to explain their scientific methods and support their demonstrations. Originally, these forms were not created by designers. Today, data visualization is a multidisciplinary endeavor in which roles are blurred and overlapping: engineers make decisions about the layout of their information, graphic designers investigate, and journalists learn to program. To complicate the situation further, the rapid development of deep learning has, in parallel, called into question some of the new occupations linked to data analysis. Nonetheless, several new jobs are likely to emerge in the years to come, and they will almost certainly require an excellent capacity for teamwork.

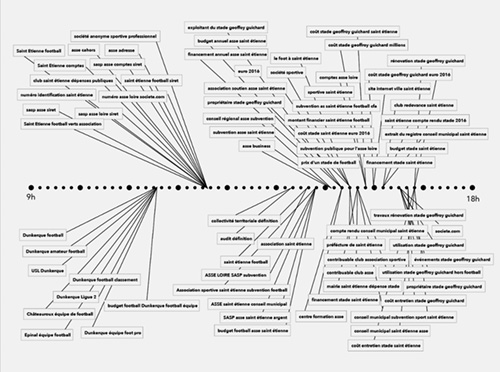

The merging of disciplines was moreover the main subject of a workshop led by the data journalist Nicolas Kayser-Bril (Director of Data Visualization on the editorial team of the now-defunct pure player www.owni.fr, 2009–2011) in the visual education workshop at HEAR (Strasbourg). On arrival, the first question he asked the students was: “Are you familiar with spreadsheets?” In an art school, working knowledge of software like Excel (1985) cannot be taken for granted. Over three days, the group of fifteen students, graphic designers and illustrators, investigated the financing of French football clubs: they combed the local press, read and analyzed available activity reports, verified available sources, made deductions about missing ones, collected and cross-referenced information, telephoned political and community figures, then organized the collected data in a cross-tabulation, etc. They discovered journalistic methods of investigation and fact-checking, using the tools of the profession. Some took to it, but most were relieved when the time came to give form to their results. Although the guest journalist was initially surprised by the tenacity with which they unearthed information, he was above all astonished by the ease with which they immediately produced visual representations. Even as they learned other methods through immersion, each remained firmly anchored in their core discipline.
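
The cross-tabulation step, which the students carried out in a spreadsheet, can be sketched in Python to show what such a table does: it sums amounts for each pair of categories and leaves explicit gaps where no source was found. The column names (`club`, `funder`, `amount`) and both functions are hypothetical.

```python
from collections import defaultdict


def cross_tab(rows, row_key, col_key, value_key):
    """Pivot a list of records into (columns, {row: [total per column]}).

    Cells with no matching record are None rather than 0, so that a
    missing source is not confused with a genuine zero.
    """
    sums = defaultdict(dict)
    columns = set()
    for r in rows:
        cell = sums[r[row_key]]
        cell[r[col_key]] = cell.get(r[col_key], 0.0) + float(r[value_key])
        columns.add(r[col_key])
    columns = sorted(columns)
    table = {row: [sums[row].get(c) for c in columns] for row in sorted(sums)}
    return columns, table


def missing_cells(columns, table):
    """List the (row, column) pairs still lacking a source: leads to follow up."""
    return [(row, col) for row, cells in table.items()
            for col, value in zip(columns, cells) if value is None]
```

In practice the rows would come from a CSV export of the spreadsheet (for example via `csv.DictReader`), and `missing_cells` yields the list of gaps that calls or further research must fill.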

This way of working, unusual in an art school, is a more common model in journalism. Initiatives like the DDJCamp (Data Driven Journalism Camp), an event organized in Berlin in November 2016 by the European Youth Press (Youthpress) and run by Nika Aleksejeva, Martin Maska and Anastasia Valeeva (see http://b-o.fr/ddjcamp), are one example. This eight-day workshop brought together around thirty participants, mostly young journalists and developers, along with some graphic designers (mostly from the École Supérieure d’Art de Cambrai). The objective was to examine how the refugee question is handled at the European level and to produce articles published in partnership with European press organizations. The working methods followed those used by the International Consortium of Investigative Journalists (ICIJ) in handling affairs such as the Panama Papers or the Paradise Papers: defining an angle on a subject, identifying potential resources, gathering, processing and culling data, followed by analysis, writing and presentation. (The ICIJ is a group of journalists from press outlets in seventy countries who work in teams on investigations involving large amounts of data with international ramifications, where the complexity of the subject matter requires collaboration; see https://www.icij.org.) Each stage is accompanied by a specialist. Careful observation of this process reveals that visualization is practiced at several stages of the work, serving both as an aid to analysis (when the data is so voluminous that anomalies are difficult to observe other than through imaging) and as a final representation for the reader. Here, graphic designers intervene from the beginning of the process, and not merely at the end to create a “visual encapsulation” of sorts, as is unfortunately all too often the case.
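
Visualization as an aid to analysis does not have to wait for a finished graphic. As a minimal, hypothetical sketch, a few lines of Python can print a text bar chart in which an outlier stands out at a glance; the `text_bars` function, its parameters and the sample values are illustrative assumptions, not part of the ICIJ methodology.

```python
def text_bars(values, width=30, mark="#"):
    """Hypothetical helper: render one horizontal bar per labelled value,
    scaled so that the largest absolute value fills `width` characters."""
    if not values:
        return ""
    peak = max(abs(v) for v in values.values()) or 1  # avoid dividing by zero
    label_w = max(len(str(k)) for k in values)
    lines = []
    for label, v in values.items():
        n = round(abs(v) / peak * width)
        lines.append(f"{str(label).ljust(label_w)} | {mark * n} {v:g}")
    return "\n".join(lines)


# Illustrative values only: the last bar is visibly out of proportion.
print(text_bars({"2014": 10, "2015": 12, "2016": 60}))
```

Such a quick view only flags where to look; checking why a value stands out remains the journalist’s work.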

Teams must be capable of leading their own investigations based upon data gathered on the problems of migration in different national contexts in Europe, while making the most of the complementary skills of each member. The underlying objective is, above all, to introduce and establish the role of the data journalist in European publications. Although the program brings together a motley mix of disciplines, they are unified by the societal themes addressed, as part of a strategy of continuing education for journalism professionals. These participants, more aware of the issues and more operationally oriented than design students, are able to obtain a result within a week. The reports produced during the workshop were then published as they stood in the international press (Denmark, Italy, Montenegro, Germany, etc.). Even if the visual quality of the productions is questionable, the process deserves praise. By sharing their working methods, these participants from a variety of fields managed to bring people together around new practices by confronting them directly with a real context. As was the case for journalists before them, designers’ growing awareness of questions related to data processing will no doubt enable them to achieve wide-ranging, high-quality results in the years to come.